AprilMAV – Using a Raspberry Pi for Indoor Robot Navigation

There are now many options available for indoor navigation, from devices such as the Intel T265 to UWB or Lidar systems. The objective of this project is to create a (relatively) cheap and accessible indoor navigation system via Apriltags.

Apriltags are widely used in the robotics community, and their pose (position and orientation) relative to the camera can be calculated directly from an image.

Aprilmav uses ceiling-mounted Apriltags as navigation markers. A Raspberry Pi captures and processes images of the Apriltags, then calculates the position and orientation of the camera (and thus of the vehicle it is mounted on) relative to the tags.

The positions of the Apriltags do not need to be known in advance. The vehicle starts at position (0,0,0) with orientation (0,0,0). When an Apriltag is first detected, it is assigned a position and orientation relative to this starting frame. Each subsequent detection of that tag then uses the change in its relative position and orientation to determine the vehicle's movement.
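The mapping idea above can be sketched with rigid-body transforms. The sketch below is not Aprilmav's actual code: `TagMap`, `make_pose` and the other helpers are hypothetical names, poses are plain 4x4 matrices, rotations are limited to yaw for brevity, and the camera-to-vehicle mounting offset is ignored. When a tag is first seen, its world pose is stored as `world_T_cam * cam_T_tag`; on later frames the camera pose is recovered as `world_T_tag * inverse(cam_T_tag)`.

```python
import math

# 4x4 homogeneous transforms stored as row-major lists of lists.

def matmul(a, b):
    """Multiply two 4x4 matrices."""
    return [[sum(a[i][k] * b[k][j] for k in range(4)) for j in range(4)] for i in range(4)]

def invert_rigid(t):
    """Invert a rigid-body transform [R | p] as [R^T | -R^T p]."""
    rt = [[t[j][i] for j in range(3)] for i in range(3)]
    p = [t[i][3] for i in range(3)]
    new_p = [-sum(rt[i][k] * p[k] for k in range(3)) for i in range(3)]
    return [rt[0] + [new_p[0]], rt[1] + [new_p[1]], rt[2] + [new_p[2]], [0.0, 0.0, 0.0, 1.0]]

def make_pose(x, y, z, yaw):
    """Build a transform from a translation and a rotation about z (yaw only, for brevity)."""
    c, s = math.cos(yaw), math.sin(yaw)
    return [[c, -s, 0.0, x], [s, c, 0.0, y], [0.0, 0.0, 1.0, z], [0.0, 0.0, 0.0, 1.0]]

class TagMap:
    """Hypothetical tag map: tag poses are learned in the starting frame, not surveyed."""

    def __init__(self):
        self.world_T_tag = {}  # tag id -> tag pose in the world (starting) frame
        self.world_T_cam = make_pose(0.0, 0.0, 0.0, 0.0)  # vehicle starts at the origin

    def update(self, detections):
        """detections: dict of tag id -> cam_T_tag (tag pose relative to the camera)."""
        estimates = []
        for tag_id, cam_T_tag in detections.items():
            if tag_id in self.world_T_tag:
                # Known tag: camera pose = world_T_tag * inverse(cam_T_tag)
                estimates.append(matmul(self.world_T_tag[tag_id], invert_rigid(cam_T_tag)))
        if estimates:
            # Use the first known tag for simplicity (a real system would combine them all)
            self.world_T_cam = estimates[0]
        # Register newly seen tags relative to the current camera pose estimate
        for tag_id, cam_T_tag in detections.items():
            if tag_id not in self.world_T_tag:
                self.world_T_tag[tag_id] = matmul(self.world_T_cam, cam_T_tag)
        return self.world_T_cam
```

In use, the first frame only registers tags, and every later frame that sees a registered tag yields a fresh vehicle pose in the starting frame.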

Hardware

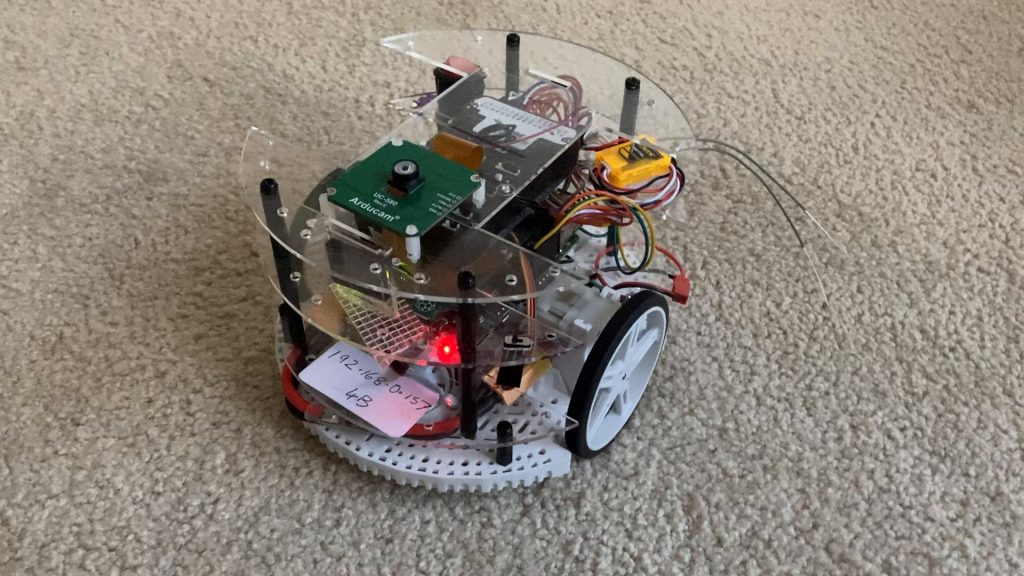

I used the following components:

- Pololu Romi Chassis

- Raspberry Pi 4B

- Pi-Connect Lite

- Arducam OV9281 camera

- Pixhawk flight controller (must have 2MB flash) running ArduPilot

- DRV8835 Dual Motor controller

The Romi has wheel encoders, which were also connected to ArduPilot for additional velocity measurements.

The ArduCam OV9281 was chosen because it's a cheap global shutter camera. A global shutter camera is essential: it exposes the whole frame at once, avoiding the image distortions that a rolling shutter introduces when the vehicle is moving.

The Raspberry Pi Camera was trialled, but gave very poor results due to motion blur.

Any ArduPilot flight controller would work, as long as it has 2MB of flash. Since I was using brushed DC motors and wheel encoders, I needed 8 PWM outputs (4 for the motors, 4 for the wheel encoders), so the Pixhawk worked well.

Any single-board computer will work, provided it is powerful enough to run Apriltag detection at more than 5 fps. I found that the Raspberry Pi 4B could do this, but the earlier Pi models could not.

Software

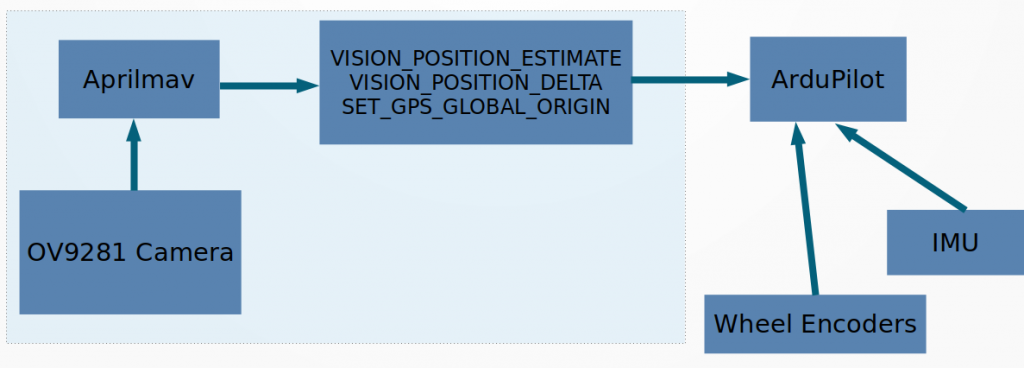

The Raspberry Pi ran the Aprilmav scripts for detection and processing, plus Rpanion-server for telemetry routing.

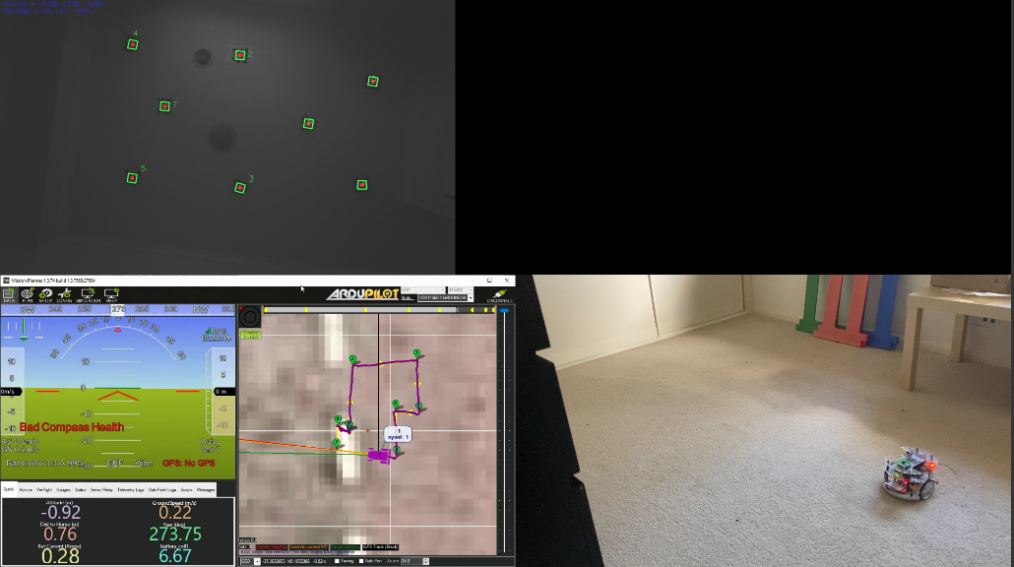

Aprilmav captured images from the camera, detected any Apriltags and then calculated the vehicle's position and orientation from the poses of the detected tags.

Aprilmav then sent the HEARTBEAT, SET_GPS_GLOBAL_ORIGIN, VISION_POSITION_DELTA and VISION_POSITION_ESTIMATE messages to ArduPilot. These in turn were consumed by ArduPilot’s EKF3 to generate a final position/orientation of the vehicle.
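To illustrate what a VISION_POSITION_DELTA message carries, the sketch below computes its fields (`time_usec`, `time_delta_usec`, `angle_delta`, `position_delta`, `confidence`, as named in the MAVLink common message set) from two consecutive vehicle poses. It is a hypothetical helper, not Aprilmav's implementation: it assumes a planar vehicle that stays level, poses given as (north, east, yaw) in a local NED frame, and it leaves out the actual packing and sending of the message. MAVLink defines `position_delta` in the body frame of the previous pose (forward, right, down), so the world-frame displacement is rotated by the inverse of the previous yaw.

```python
import math

def wrap_pi(angle):
    """Wrap an angle in radians to the range [-pi, pi)."""
    return (angle + math.pi) % (2.0 * math.pi) - math.pi

def position_delta_fields(prev, curr, prev_time_us, curr_time_us, confidence=100.0):
    """
    Hypothetical helper: VISION_POSITION_DELTA-style fields from two planar
    poses (north, east, yaw) in a local NED frame. Assumes roll = pitch = 0.
    """
    pn, pe, pyaw = prev
    cn, ce, cyaw = curr
    dn, de = cn - pn, ce - pe
    # Rotate the world-frame displacement into the previous body frame.
    c, s = math.cos(pyaw), math.sin(pyaw)
    forward = c * dn + s * de
    right = -s * dn + c * de
    return {
        "time_usec": curr_time_us,
        "time_delta_usec": curr_time_us - prev_time_us,
        "angle_delta": [0.0, 0.0, wrap_pi(cyaw - pyaw)],  # roll, pitch, yaw
        "position_delta": [forward, right, 0.0],          # forward, right, down
        "confidence": confidence,                         # MAVLink range is 0-100
    }
```

For example, a vehicle facing east (yaw of 90 degrees) that moves 1 m east reports a delta of 1 m forward and 0 m right. Wrapping the yaw change keeps a turn across the +/-180 degree boundary small instead of reporting an almost full revolution.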

The full Aprilmav source code is at https://github.com/stephendade/aprilmav

Performance

Given the low-cost hardware involved, the accuracy was around 10 cm, which is sufficient for most indoor navigation tasks.

It should be noted that the Apriltags do not have to be placed on the ceiling. They can be placed anywhere, but the best accuracy was obtained with the tags on the ceiling, because the upward-facing camera then always viewed them head-on, at 90 degrees to the tag plane (Apriltag pose accuracy decreases as the viewing angle moves away from 90 degrees).
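The viewing angle can be checked per detection from the tag's estimated rotation. In the sketch below, "off-axis angle" means the deviation from head-on viewing, so 0 degrees corresponds to the ideal 90-degree case described above. The helper names and the 30-degree cut-off are hypothetical, chosen only for illustration; the sketch assumes the detector returns a 3x3 rotation of the tag relative to the camera, with the camera looking along its own z axis.

```python
import math

def off_axis_angle_deg(rotation):
    """
    Angle in degrees between the camera's optical axis and the tag's normal,
    given the 3x3 rotation of the tag relative to the camera.
    0 means the camera views the tag head-on.
    """
    # The third column of R is the tag's z axis expressed in the camera frame;
    # its z component is the cosine of the angle to the camera's z axis.
    # abs() makes the result independent of which way the tag's z axis points.
    cos_angle = abs(rotation[2][2])
    return math.degrees(math.acos(min(1.0, cos_angle)))

def keep_detection(rotation, max_off_axis_deg=30.0):
    """Hypothetical filter: reject detections viewed at too oblique an angle."""
    return off_axis_angle_deg(rotation) <= max_off_axis_deg
```

A tag tilted 45 degrees relative to the camera would be rejected with the default cut-off, while a ceiling tag seen straight on passes.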

Additionally, a well-lit environment is required, so the camera can clearly see the features of the Apriltags.

In terms of frame rate, Aprilmav needs to run at more than 5 fps to provide a good data feed to ArduPilot.
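One simple way to confirm that the pipeline stays above the 5 fps threshold is to average the frame rate over a sliding window of recent frames. The `RateMonitor` class below is a hypothetical sketch, not part of Aprilmav; the window size of 20 frames is an arbitrary assumption.

```python
from collections import deque

class RateMonitor:
    """Track the processing rate over a sliding window of recent frames."""

    def __init__(self, window=20, min_fps=5.0):
        self.stamps = deque(maxlen=window)  # most recent frame timestamps, in seconds
        self.min_fps = min_fps

    def tick(self, timestamp_s):
        """Record that a frame finished processing at timestamp_s."""
        self.stamps.append(timestamp_s)

    def fps(self):
        """Average frames per second across the window (0.0 until two frames exist)."""
        if len(self.stamps) < 2:
            return 0.0
        span = self.stamps[-1] - self.stamps[0]
        return (len(self.stamps) - 1) / span if span > 0 else 0.0

    def healthy(self):
        """True if the windowed rate is above the minimum required rate."""
        return self.fps() > self.min_fps
```

In a capture loop, calling `monitor.tick(time.monotonic())` after each processed frame and logging a warning whenever `monitor.healthy()` is false would flag slow frames before they degrade the feed to ArduPilot.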

The Romi's wheel encoders significantly reduced the lag in the position and orientation estimates. This lag was caused by Aprilmav's processing delay of approximately 125 ms.
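To put the 125 ms figure in context: at an assumed speed of 0.5 m/s, the vehicle travels 0.5 × 0.125 = 0.0625 m (about 6 cm) while a single frame is processed, which is a large fraction of the ~10 cm accuracy. One generic way encoder data can bridge that gap is to dead-reckon the delayed fix forward using the measured speed and turn rate. The sketch below does this under a constant-speed, constant-turn-rate assumption; the function names are hypothetical, and this is not how ArduPilot's EKF3 actually fuses the encoder and vision data.

```python
import math

PROCESSING_DELAY_S = 0.125  # approximate Aprilmav processing delay quoted above

def distance_during_delay(speed_mps, delay_s=PROCESSING_DELAY_S):
    """Distance in metres the vehicle travels while one frame is processed."""
    return speed_mps * delay_s

def predict_forward(x, y, yaw, speed_mps, yaw_rate_rps, delay_s=PROCESSING_DELAY_S):
    """
    Propagate a delayed (x, y, yaw) fix to the present using wheel-encoder
    speed and yaw rate, assuming both stay constant over the delay.
    """
    if abs(yaw_rate_rps) < 1e-9:
        # Straight-line motion along the current heading.
        return (x + speed_mps * delay_s * math.cos(yaw),
                y + speed_mps * delay_s * math.sin(yaw),
                yaw)
    # Motion along a circular arc of radius speed / yaw_rate.
    new_yaw = yaw + yaw_rate_rps * delay_s
    r = speed_mps / yaw_rate_rps
    return (x + r * (math.sin(new_yaw) - math.sin(yaw)),
            y - r * (math.cos(new_yaw) - math.cos(yaw)),
            new_yaw)
```

For a vehicle driving straight ahead at 1 m/s from the origin, `predict_forward(0, 0, 0, 1.0, 0.0)` moves the fix 0.125 m along its heading.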

A full video explaining Aprilmav, plus a short demonstration is at https://youtu.be/v4RMZ3AGHIc.